Experts offer advice on strategically approaching multicloud development, from balancing features from disparate clouds to mastering observability and automation.

If the features of one cloud environment are a business benefit, deploying multiple clouds has to be even better, right?

It’s true that a multicloud architecture promises to give you the best of all possible worlds, letting you take advantage of the specialized features of multiple cloud providers — but there’s a catch. It’s true only if your development practices are ready for the challenge.

Writing code for multiple clouds is a strategic, architectural, and operational shift from traditional cloud computing. From container orchestration to observability to internal tooling, every part of the development process needs to evolve to match the complexity of your infrastructure.

We spoke to engineering leaders and architects who are getting it right — and who admit they sometimes get it wrong. Here’s what they’ve learned.

Plan your multicloud attack

Before your development teams write a single line of code destined for multicloud environments, you need to know why you’re doing things that way — and that lives in the realm of management.

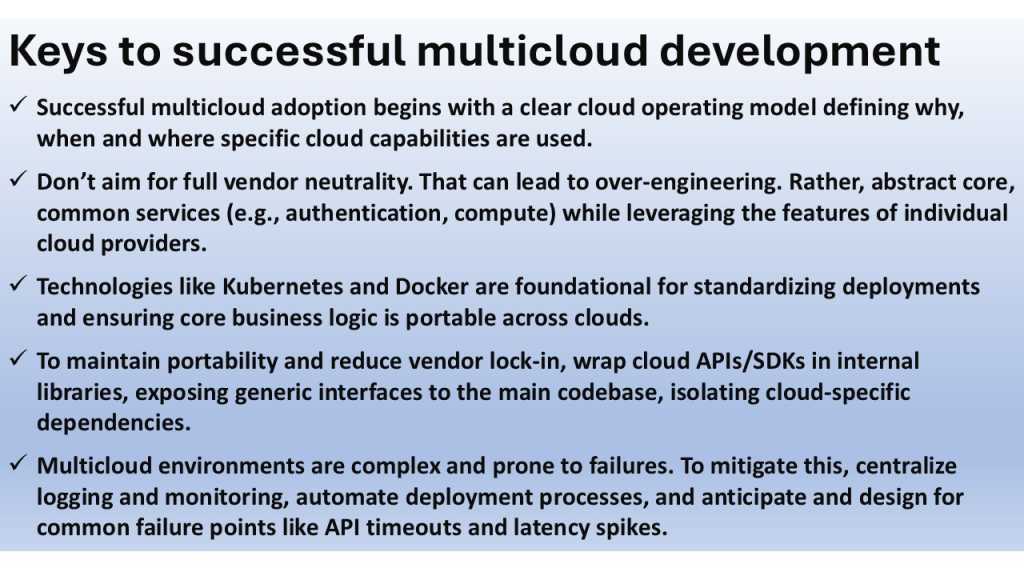

“Multicloud is not a developer issue,” says Drew Firment, chief cloud strategist at Pluralsight. “It’s a strategy problem that requires a clear cloud operating model that defines when, where, and why dev teams use specific cloud capabilities.” Without such a model, Firment warns, organizations risk spiraling into high costs, poor security, and, ultimately, failed projects. To avoid that, companies must begin with a strategic framework that aligns with business goals and clearly assigns ownership and accountability for multicloud decisions.

Running a multicloud environment offers clear benefits in terms of features and flexibility, but it’s a complex process. Here five things you need to know.

IDG

This process shouldn’t just be top-down. Heather Davis Lam, founder and CEO of Revenue Ops, emphasizes the need for cross-functional communication. “Talk to each other,” she says. “Multicloud projects involve developers, ops, security, sometimes even legal. Problems usually come from miscommunication, not bad code. Regular check-ins and honest conversations go a long way.”

This planning process should settle on the question of why multicloud is a good idea for your enterprise, and how to make the best use of the specific platforms within your infrastructure.

“The ultimate paradox of multicloud is how to optimize cloud capabilities without creating cloud chaos,” Firment says. “The first rule of thumb is to abstract the core shared services that are common across clouds, while isolating cloud-specific services that deliver unique customer value. For example, use a standard authentication and compute layer across all clouds while using AWS to optimize the cost and performance of queries on large datasets using Amazon S3 and Athena.”

Generic vs. specific cloud environments

The question of when and how to write code that’s strongly tied to a specific cloud provider and when to write cross-platform code will occupy much of the thinking of a multicloud development team. “A lot of teams try to make their code totally portable between clouds,” says Davis Lam.

“That’s a nice idea, but in practice, it can lead to over-engineering and more headaches.” Davis warns against abstracting infrastructure to the point that development slows and complexity increases. “If you or your team find yourselves building extra layers just so that this will work anywhere, it’s a good moment to pause.”

Patrik Dudits, senior software engineer at Payara Services, agrees. He says excessive abstraction as a common but misguided attempt at uniformity: “One common mistake is trying to limit your architecture to the ‘lowest common denominator’ of cloud features. In practice, embracing the strengths of each cloud is a more successful strategy.”

Dudits advocates for designing systems with autonomy in mind — where services can operate independently in their respective clouds rather than being yoked together by a need for identical implementation.

This principle of autonomy, rather than strict uniformity, also plays a central role in how Matt Dimich, VP of platform engineering enablement at Thomson Reuters, approaches multicloud design. “Our goal is to be able to have agility in the platform we run our applications on, but not total uniformity,” he says. “There is innovation in less expensive, faster compute every year, and the quicker we can take advantage of that, the more value we can deliver to our customers.” Dimich stresses a balanced approach: leveraging the native services of individual cloud services where it makes sense while still keeping a watchful eye on avoiding tight coupling.

Pluralsight’s Firment also sees the need for balance. He says that “the ultimate paradox of multicloud is how to optimize cloud capabilities without creating cloud chaos. The first rule of thumb is to abstract the core shared services that are common across clouds, while isolating cloud-specific services that deliver unique customer value.” For example, you might standardize authentication and compute layers while taking advantage of AWS-specific tools like Amazon S3 and Athena to optimize data queries.

Similarly, Davis Lam suggests dividing business logic and infrastructure. “Keep the core business logic portable — APIs, containerized apps, shared languages like Python or Node — that’s where portability really matters,” she says. “But when it comes to infrastructure or orchestration, I’d say lean into what the specific cloud does best.”

Dudits agrees: “Multiple clouds are leveraged because there is clear advantage for a specific task within an intended application,” he says. “Simply mirroring the same stack across providers rarely achieves true resilience and often introduces new complexity.”

Writing cross-platform code

What’s the key to making that core business logic as portable as possible across all your clouds? The container orchestration platform Kubernetes was cited by almost everyone we spoke to.

Radhakrishnan Krishna Kripa, lead DevOps engineer at Ansys, has helped build Kubernetes-based platforms that span Azure, AWS, and on-prem environments. “Use Kubernetes and Docker containers to standardize deployments,” he says. “This helps us write code once and run it in AKS, AWS EKS, or even on-prem clusters with minimal changes.”

Sidd Seethepalli, CTO and co-founder of Vellum, echoes that view. “We rely on Kubernetes rather than provider-specific services, allowing us to deploy consistently anywhere a Kubernetes cluster exists.” Vellum uses templated Helm charts to abstract away cloud-specific configurations and employs tools like KOTS to simplify deployment customization.

For Neil Wylie, principal solutions architect at Myriad360, Kubernetes is just the foundation. “Building on Kubernetes allows me to standardize application definitions and deployments using Helm, typically automating the rollout via a GitOps workflow with tools such as ArgoCD,” he says. This approach offers “true workload mobility” while ensuring consistent, validated deployments through CI/CD pipelines.

Speaking of CI/CD, the tools that power your code’s development pipelines matter just as much as the infrastructure your code will run on runs on. Kripa recommends standardizing pipelines using cloud-neutral tools like GitHub Actions and Terraform Cloud. “Design your pipelines to be cloud-neutral,” he says.

“We primarily use Azure, but tools like GitHub Actions allow us to manage builds and infrastructure across multiple environments with a consistent workflow.” This consistency helps reduce the burden on developers when moving between providers or deploying to hybrid environments.

No matter how much you standardize your code, however, you’ll still have to interact with APIs and SDKs of individual cloud providers. Anant Agarwal, co-founder and CTO at Aidora, has a pattern to do that without sacrificing portability: adapter layers. “We treat every cloud API or SDK like a dependency: We wrap it in an internal library and expose a clean, generic interface to the rest of the codebase,” Agarwal says. This approach keeps cloud-specific logic isolated and swappable, making core application logic easier to maintain and more resistant to platform lock-in.

The open-source community is also helping fill in the gaps, especially where proprietary cloud features have historically created friction. “I like to keep an eye on the CNCF landscape to see the emerging projects — generally, what you notice is that it’s exactly these ‘sticky’ points that the new projects try to solve for,” says Wylie, pointing to the Serverless Workflow project as an example.

Conquering with multicloud complexity

As it’s no doubt become clear, heterogenous multicloud environments are complex, and your development process will need to accommodate that. Visibility is particularly important, and getting it right starts with centralizing your logs and alerts. “We route all logs to a unified observability platform (Datadog), and create a consolidated view,” says Aidora’s Agarwal. “Perfect coverage is tough with newer tools, but centralization helps us triage incidents fast and keep visibility across cloud providers.”

Payara’s Dudits emphasizes a similar approach. “We recommend investing in a central, provider-neutral dashboard for high-level metrics across your multi-cloud estate,” he says. “This unified view helps developers and ops teams quickly spot issues across providers, even if deeper diagnostics are still done through provider-specific tools.”

For Revenue Ops’ Davis Lam, good logging is one of the most critical tools in a multicloud environment. “It’s tough enough to debug one cloud. When you’re working across three or four, good logging and monitoring can save you hours — or days — of work. Get it right early,” she says. But she cautions against collecting logs and setting alerts just for the sake of it. “A big tip is to think about what should actually retry and what should just fail and alert someone. Not every failure should automatically trigger a retry loop or fallback. Sometimes it’s better to let a process stop and get someone’s attention.”

Automation is another tool that can tame multicloud development environments. “Deployment processes need to be bulletproof because coordinating across providers is error-prone,” Agarwal says. “We automate everything using GitHub Actions to ensure schema changes, code deploys, and service updates go out in sync.”

Agarwal also noted that internal AI tools can streamline complex multicloud workflows. “We’ve turned our internal playbooks into a custom GPT that answers context-specific questions like ‘Where do I deploy this service?’ or ‘Which provider handles file uploads?’ instantly,” he says. “To reduce friction further, we’ve codified the same rules into Cursor so developers get inline guidance right inside their IDE.”

Ultimately, the biggest takeaway might be to simply plan for failure. “The more clouds and services you tie together, the more chances there are for something to break — usually in the spots where they connect,” says Davis Lam. “So things like API timeouts, auth tokens expiring, or just weird latency spikes become more common. You’ll want to expect those kinds of failures, not treat them as rare events. Think about what should actually retry and what should just fail and alert someone. Not every failure should automatically trigger a retry loop or fallback. Sometimes it’s better to let a process stop and get someone’s attention.”

“At the end of the day, multicloud development is messy — but if you expect that and plan for it, you’ll write better, stronger code,” she adds. “Assume things will break and build with that in mind. It’s not pessimistic, it’s realistic.”