Databricks has added a new agent, the Data Science Agent, to the Databricks Assistant, in an effort to help data practitioners automate analytics tasks.

The agent, which is available now in preview and is expected to be rolled out soon to enterprise customers, can be toggled from inside the Assistant window in Notebooks and the SQL Editor, and builds on the Assistant’s functionality to accelerate users’ work, the company said in a blog post.

It said that data practitioners can use the agent for tasks such as data exploration, training machine learning models, and diagnosing and fixing errors.

“You can ask the agent to ‘perform exploratory data analysis on @table to identify interesting patterns’. You can provide additional guidance if you want to focus the exploration on a particular area,” the company said.

For machine learning tasks, the agent can be asked to “train a forecasting model predicting sales in @sales_table”, it added.

With this offering, the company is joining many other analytics software providers in adding agents to help enterprises automate tasks; hyperscalers like Google and Microsoft are integrating similar capabilities into their own data infrastructure services, and Databricks’ biggest rival, Snowflake, is also adding agents to its portfolio of offerings.

Comparing the Databricks Assistant and the Data Science Agent, Forrester VP and principal analyst Charlie Dai said that the new agent upgrades the Assistant from a code-generation copilot to an autonomous agent capable of planning, executing, and iterating on multi-step workflows.

And, noted Samikshya Meher, practice director at Everest Group, the addition of the new agent will cut down the time spent on tedious but necessary steps like data cleaning, model training, and error detection.

“Instead of juggling these repetitive tasks, data practitioners can focus on higher-value analysis… The net efficiency gain comes from both reduced cycle times in development and improved alignment between analytical output and business decision-making needs,” Meher said.

Databricks expects to soon add new capabilities to the Data Science Agent, such as broader context via MCP integration, smarter memory, and faster data discovery, but the company didn’t provide a timeline.

However, it added, “agent mode will grow to orchestrate entire workloads across Databricks. We’re building towards agent workflows for data engineering and beyond.”

To try out the new agent, workspace admins must enable the Assistant agent mode beta from the Databricks preview portal. Once agent mode is enabled, users will be able to toggle the agent from within the Assistant.

The Rust Foundation, steward of the Rust programming language, has launched the Rust Innovation Lab, offering fiscal sponsorship to relevant, well-funded open source projects. The inaugural hosted project is Rustls, a memory-safe, high-performance TLS (Transport Layer Security) library, the foundation said.

Announced September 3, Rust Innovation Lab sponsorship includes governance, legal, networking, marketing, and administrative support. Creation of the lab comes at a pivotal moment, the foundation said. Rust adoption has accelerated across both industry and open source, and many projects written in Rust have matured into critical pieces of global software infrastructure, the foundation stressed. With Rust becoming more deeply integrated in everything from cloud platforms to embedded systems, a growing need has arisen for neutral, community-led governance, the foundation said. Reliable institutional backing will support these projects in remaining sustainable, secure, and vendor-independent, the foundation added.

The inaugural sponsored project, Rustls, responds to a growing demand for secure, memory-safe TLS in safety-critical environments. The library is used to build secure connections in the Rust ecosystem, the foundation said. Rustls demonstrates Rust’s ability to deliver security and performance in one of the most-sensitive areas of modern software infrastructure, the foundation said.

The Rust Foundation welcomes funded open source projects to learn more at RustFoundation.org. Projects in the lab gain visibility across the Rust ecosystem, increasing their ability to attract contributors, partners, and financial support. Participants also benefit from peer networking opportunities to share lessons and best practices, said the foundation.

The upcoming version of PostgreSQL 18, the latest release of the popular open-source database due later this month, will introduce performance-enhancing features, promising significant gains for online transactional processing (OLTP) workloads.

However, according to industry experts, PostgreSQL 18 falls short in AI readiness despite rapid proliferation.

PostgreSQL has become a go-to database for developers, especially for building modern applications in a cost-effective manner, as it offers a rich feature set, extensibility, and a permissive license that supports enterprise adoption.

However, PostgreSQL has one Achilles’ heel — it is not effective for online analytical processing (OLAP), which impedes AI readiness as enterprises increasingly adopt hybrid transactional/analytical processing (HTAP) architectures to support AI workloads, specifically agentic AI.

HTAP, which merges OLTP and OLAP capabilities in a single system, is essential for agentic systems as it provides the agent more context for better decision-making by providing real-time analytics on live transactional and operational data.

“…there are no features in PostgreSQL 18 which specifically benefit analytical and AI workloads,” Alastair Turner, technology evangelist at Percona, a provider of PostgreSQL and other databases.

The lack of features for OLAP performance is so evident that PlanetScale co-founder and CEO Sam Lambert suggests that “it’s best to separate concerns and choose a dedicated OLTP database (PostgreSQL) and add on analytics databases like ClickHouse, BigQuery, etc, as needed.”

“Having separated compute and storage resources dedicated to each purpose reduces the risk of contention (competition of workloads for shared resources, such as CPU) being a problem and negatively impacting application performance,” Lambert explained.

PlanetScale, too, offers a managed version of PostgreSQL.

However, in contrast to Turner and Lambert, PostgreSQL-based database provider firm EDB’s SVP of database servers and tools, Tom Kincaid, is a bit more optimistic about PostgreSQL 18’s OLAP capabilities, as he said that every release expands its range of analytics use cases.

“This release (PostgreSQL 18), for example, supports asynchronous data reads and many optimizer enhancements. These improvements accelerate performance for large data sets and queries that involve many tables — exactly the kinds of workloads frequently involved in OLAP,” Kincaid said.

Earlier releases, according to Kincaid, have added features such as Foreign Data Wrappers (FDWs) and Table Access Method (TAM) to enable more analytical workloads.

While FDWs act as a bridge that enables PostgreSQL to send queries to a remote data source and receive the result, TAM is a feature that allows users to change data access settings and configure it for OLAP: read-heavy and often involving complex aggregations over large datasets.

Performance gains for OLTP

PostgreSQL 18, though lacking in AI readiness, introduces a few features that experts expect will result in significant performance gains for OLTP workloads.

One such feature, according to Lambert, is Asynchronous I/O or AIO in short.

“The use of Asynchronous I/O via ‘io_uring’ Linux interface should really help improve IO performance, leading to lower latencies. This is a big win for OLTP, where time is everything… We will likely see especially good gains here in environments with network-attached storage,” Lambert said.

Asynchronous IO, according to Percona’s Turner, allows database workers to issue multiple IO instructions without waiting for earlier instructions to complete, and this delivers performance gains by not blocking processing while waiting for subsequent IO operations to start.

However, AIO for now supports disk-heavy reads and not writes, experts pointed out, adding that work is underway on improving bulk writes and checkpoint writes. Examples of write-heavy OLTP workloads are vehicle telemetry, social media, and online gaming platforms.

Another feature that will help PostgreSQL 18’s performance in indexing and caching is the upgrade of universally unique identifier (UUID) from version 4 to 7.

UUID is a 128-bit value used in PostgreSQL to provide a globally unique identifier for records, and UUID 7 differs from UUID 4 in the way it stores the 128-bit value, according to Tuner.

Unlike UUID 4, which is fully random, UUID 7 starts with a timestamp, and this means recent UUID 7 values are close together in sort order, so they’re stored on fewer, consecutive index pages, Turner said, adding that this improves cache efficiency, since active data is more likely to stay in memory.

“In contrast, UUID 4 scatters recent values across many pages, making caching less effective.”

Other notable features

PostgreSQL also comes with other notable features, such as improved Explain and OAuth.

The improved Explain command, according to EDB’s Kincaid, should assist DBAs and developers in identifying situations where a query needs to be tuned and how it should be tuned, resulting in faster troubleshooting, optimized systems, and better performance.

“The more information you have regarding how the query planner executed or will execute your query, the more information you have to make adjustments to the statistics, indexes, configuration settings, and the query itself,” Kincaid said.

The OAuth feature, on the other hand, will significantly improve security, according to experts.

“It should enable Postgres to integrate much more easily with corporate identity management systems and will help eliminate a lot of duplication of effort and potentially error-prone processes while also strengthening security by aligning Postgres with the same identity and access controls used across the enterprise,” Kincaid said.

A complete list of changes made in PostgreSQL can be found in its release notes.

Shortly after Reuters broke the news that Meta, the company behind Facebook and Instagram, had signed a $10 billion, multiyear cloud deal with Google, a CIO friend called me with some questions: “David, should we be doing something like this? Are these mega prepurchase cloud agreements the new table stakes for enterprises?” The question is as pressing as it is complex. My answer, shaped by years of watching and guiding digital transformation, may not be what cloud vendors want you to hear.

Here is the current situation, my advice, and its rationale.

Why Meta made a deal

The headlines make it look simple. Meta is spending more than $10 billion over six years with just one cloud provider, betting on the value of stability, scale, and access to the very latest infrastructure. In their view, this isn’t just a purchase. It’s a strategic investment in the future of AI and digital services. CEO Mark Zuckerberg has been very public about Meta’s intent to build massive, world-dominating AI data centers. That kind of ambition almost demands a high-profile, high-commitment partner.

Should everyone else follow Meta’s lead? The short answer is no. What makes perfect sense for Meta may be a minefield for most enterprises.

Meta’s environment is unusually unified. Its workloads are highly engineered for scale, and the company can afford to run a single technology stack tailored to a specific cloud vendor’s offerings. This makes it easy for them to leverage the deepest discounts, build close relationships with their chosen provider, and manage everything in a coordinated, relatively streamlined way.

But most enterprises look nothing like Meta. Instead of a single, coherent stack, large businesses tend to operate a patchwork of legacy systems, cloud-native apps, and recently acquired technologies, all connected by years of changing business priorities, vendor deals, and hurried digital transformation projects. That’s where comparisons break down—and where real risks of these mega deals begin.

Lock-in versus big discounts

The biggest carrot in these long-term cloud contracts is the promise of lower costs. Cloud providers love to dangle deeper discounts, exclusive services, and premium support in front of enterprises in hopes of getting a large, up-front investment and a pledge of long-term loyalty. It’s tempting to think that the same tricks that work for buying bulk office supplies might apply to your digital backbone.

Here’s what many enterprises overlook: Every time you sign one of these massive agreements, you’re also becoming tightly connected to a single ecosystem. Your architecture, processes, and even your people begin to specialize in that specific provider’s way of doing things. Proprietary APIs, custom infrastructure management, and unique security models initially provided a tactical edge but can quickly turn into operational constraints if (or when) the industry changes.

Technology never stands still. What looks like the obvious best choice today could be obsolete in as little as 18 months. New regulations appear, innovative technologies disrupt the market, businesses merge or split, and priorities shift. When any of these inevitable changes happen, an enterprise locked into a six-year, multi-billion-dollar contract has very few good options, especially if the contract was structured to maximize early savings over long-term flexibility.

Meta doesn’t face these problems. Its unified stack and precise goals make change less likely and migration much simpler if it ever becomes necessary. In contrast, most enterprises operate in a world of constant churn. No provider is best-in-class across every key requirement, and even your most important priorities may shift during the life of a long-term deal.

The result? Discounted pricing rarely makes up for the value lost when flexibility and leverage disappear. If a new cloud technology emerges that could boost your productivity or cut your costs, you want to be able to adopt it without paying a massive penalty or undergoing a disruptive technical migration. If you’re locked in, that’s often impossible.

Keep your options open

So, what should CIOs, CTOs, and IT leaders do when presented with these big, bold offers? My advice remains consistent: Prioritize agility over price and seek flexibility wherever you can. Treat the cloud as a toolkit, not a destination.

This means resisting the siren call of large, long-term, single-provider deals. Instead, look for strategies that leave room to maneuver. Multicloud architectures—using different cloud providers for different applications or workloads—allow you to choose the best services for each job. Shorter-term contracts or reserved instances can offer savings without locking you in for years. Also, keep a close eye on industry standards and vendor-neutral technologies such as Kubernetes, containers, or open APIs. These make it easier to move workloads or adopt new providers as your needs evolve.

Another advantage of a more flexible approach is that it fosters a culture of continuous optimization. Instead of making a choice once every few years and hoping for the best, your teams stay focused on constant improvement, always asking, “Could we do this faster, more securely, or more cost-effectively somewhere else?” Vendors know their business with you is never guaranteed, which makes them more likely to provide real value year after year, not just at renewal time.

It’s also worth thinking about the “unknown unknowns.” During periods of business growth, regulatory shifts, or unexpected events (mergers, acquisitions, divestitures, compliance requirements, or major market changes), the most successful organizations are those that can respond quickly. Being stuck with a long-term cloud deal, no matter how attractive it once seemed financially, can limit your options and put your business at risk.

Observe Meta, but don’t follow blindly

When my CIO friend asked about Meta’s giant agreement with Google, my answer was measured. There’s nothing wrong with watching the world’s biggest companies and learning from their strategies. But you must also recognize how different your reality likely is. Meta operates at a massive scale with a single-minded purpose that few other organizations can match. Its business is built for this sort of cloud deal.

For a typical large enterprise with layers of legacy technology, diverse workloads, and a mission that evolves year by year, a long-term, single-provider prepurchase agreement is almost certainly the wrong approach. Instead, focus on flexibility, continuously review your options, and negotiate from a position of strength. The goal should always be to adapt and thrive as new technologies, business models, and requirements emerge.

Behind any announcement about a multi-billion-dollar cloud deal is a simple truth: In technology, the only constant is change. Be prepared for it. Your future business will thank you for keeping all your options open.

JavaScript is the most egalitarian of languages. It is the common tongue of the Internet, with the lowest bar to entry. Just hit F12 in the browser, and there you go—you’re programming in JavaScript!

Despite (or maybe because of) its ease of use, JavaScript also has one of the most sprawling and complex ecosystems in the world of programming. After nearly 30 years of intensive refinement, the JavaScript language continues to see regular and significant improvements. As developers, we have a front-row seat to the evolution of the language in every ECMAScript specification release, not to mention the continuous stream of innovation in front-end JavaScript frameworks.

A less fortunate outcome of JavaScript’s universality is the recent spate of npm attacks, which were reported on InfoWorld last week.

Read on for these stories and more, in this month’s report.

Top picks for JavaScript readers on InfoWorld

Hands-on with Solid: Reactive programming with signals

Solid is one of the most influential front-end frameworks in the JavaScript universe, especially for its use of signals for state and reactivity. Solid is also celebrated for its developer experience (DX), with a clean design that can handle any requirement, from small projects to the enterprise. Add to that its reputation for excellent performance, and it is not hard to see why Solid is such a popular choice.

Hands-on with Svelte: Build-time compilation in a reactive framework

Svelte pioneered the compile-time optimization that has since become common among front-end JavaScript frameworks. This approach yields great performance because minimal code is sent across the wire, and the framework isn’t interpreted on the browser. All that is run is the JavaScript code. Svelte’s syntax is also super minimal, yet at times beautifully expressive.

ECMAScript 2025: The best new features in JavaScript

The attention grabber in this summer 2025 release is the new built-in Iterator object and its functional operators. Other updates include new Set methods, a new JSON module import, improvements to regular expressions, a Promise.try method for streamlining promise chains, and a new Float16Array typed array.

Video: How to build native desktop apps vs. web UI apps

When does it make sense to build a desktop app with native or semi-native UI components, versus going the web-UI route with a toolkit like Electron? This video demonstrates both approaches with pros and cons of each. (Also see the accompanying article: Native UI vs. web UI: How to choose.)

More good reads and JavaScript updates elsewhere

Angular summer update 2025

The Angular team shares a collection of updates to the framework in its dot release. Items include Zoneless APIs being production-ready, an (experimental) MCP server for LLM integration, and DevTool improvements, including Signal and router visualization. The section on AI is particularly interesting as it shows the framework’s extensive efforts to strengthen machine learning tie-ins that may be indicative of the future for the industry as a whole.

Next.js 15.5

The official Vercel announcement for NextJS 15.5. This release includes a --turobpack flag for the Next build command. Turbopack is a newer build tool, also made by Vercel. Turbopack is Rust-based and intended to improve build-times (this is currently a beta feature). Next’s support for Node middleware is also now stable. Other changes include Typescript enhancements and the deprecation of the next lint command–use ESlint instead (incidentally ESLint now has multithreading via worker threads).

JavaScript’s trademark problem

An interesting description of the state of the JavaScript trademark, which has remained under Oracle’s control since the tech giant purchased Sun Microsystems back in 2010. This gives you a lot of the background story and an update on the present lawsuit, spearheaded by Deno, to bring the JavaScript name and trademark into the public domain. It also tells the (rather lovely) origin tale for the now-famous JS Logo.

In January, assumptions around AI were shaken up by DeepSeek, a small Chinese company that nobody had heard of. This week it was Switzerland’s turn to stir things up.

Apertus (Latin for ‘open’) is a brand new large language model (LLM) that its creators, a group of Swiss universities in collaboration with the Swiss National Supercomputing Centre (CSCS), claim is one of the most powerful open-source AI platforms ever released.

Benchmarked as roughly on par with Meta’s Llama 3 model from 2024, Apertus is not the most powerful LLM out there, but it is still formidable.

Trained on 15 trillion tokens across using the 132-Nvidia H100 Alps CSCS supercomputer, it has been released on AI open source community site Hugging Face in an 8 billion parameter version for smaller-scale use, and a larger 70 billion parameter version suitable for research and commercial applications.

However, Apertus was created to be more than big, its makers said. Their ambition was to build something completely different to the ChatGPT AI mainstream, including better serving a global user base by training it on a wide range of non-English languages.

Fully open

The first interesting feature of Apertus is simply that it is Swiss. This might sound like a detail, but could yet be an advantage in an industry dominated by nations such as the US and China.

What’s being pitched here is an idea of sovereign or national AI in which Switzerland offers European and global users something more distinctive than Silicon Valley’s commercial AI models.

A major part of this will be the model’s open, ethical credentials. What this means is that anyone using it can get under the hood to see exactly how it was trained, in a way that is intended to be transparent and reproducible.

‘Openness’ in LLMs is contentious. For Meta’s Llama, for example, it refers to open weights, which means you can see how the LLM operates on data without seeing the data on which it was trained.

The Swiss, by contrast, prefer the idea of ‘full openness’ in which users can see everything, including training data and the 15 trillion token volume used by the model.

“Fully open models enable high-trust applications and are necessary for advancing research about the risks and opportunities of AI. Transparent processes also enable regulatory compliance,” said Imanol Schlag, a research scientist at the University of Zurich’s ETH AI Center who worked on the project.

This fully open nature would also be important for the organizations its makers believe might use it. One of the biggest enterprise concerns around using AI today is the domination of large, mainly US-based tech companies that risk pushing ethical and legal boundaries beyond their breaking point.

This is especially true in the EU, where enterprises must grapple with staying on the right side of the 2024 EU AI Act and General-Purpose AI Code of Practice. These have generated anxiety about compliance, on top of the more general worries that the strict standards the EU AI Act imposes might act as a brake on AI development in the EU.

“Particular attention has been paid to data integrity and ethical standards: the training corpus builds only on data which is publicly available,” said the Apertus announcement.

“It is filtered to respect machine-readable opt-out requests from websites, even retroactively, and to remove personal data, and other undesired content before training begins.”

This includes high compliance standards in terms of author rights, as well as around the ‘memorization’ of training data that might create privacy problems with mainstream models through reproduction of snippets of copyrighted or sensitive data.

Additionally, organizations that want to stay in control of their data have the option to download Apertus to their own servers.

However, as with all open models, if copyrighted text or personal data is later removed from the training data, there is no easy way to guarantee that downloaded versions of the model reflect this, beyond asking enterprises to monitor for upstream changes. Inevitably, this puts some pressure on enterprise AI governance, who might be held liable for compliance issues.

Need for speed

Despite the ethical appeal of Apertus, it will still need to compete with rivals in terms of AI inference. The notion that organizations needed to go to large closed-source LLM makers to get this was mistaken, according to Antoine Bosselut, assistant professor at École Polytechnique Fédérale de Lausanne (EPFL), which also collaborated on the Swiss LLM.

“Over the last few years, we heard this narrative that commercial LLM providers were light years ahead of anything that anybody else could create. What I hope we’ve shown here today is that that’s not necessarily the case and that this gap is far less wide than we had imagined,” he said in a promotional video.

“Apertus demonstrates that generative AI can be both powerful and open,” said Bosselut. “The release of Apertus is not a final step, rather it’s the beginning of a journey, a long-term commitment to open, trustworthy, and sovereign AI foundations, for the public good worldwide.”

More AI news and insights:

White House AI plan heavy on cyber, light on implementation

How the generative AI boom opens up new privacy and cybersecurity risks

Google has introduced Gemini 2.5 Flash Image, an image generation and editing model enabling capabilities including the blending of multiple images into a single image. Developers can use the model for multimodal creativity for visual apps.

Introduced August 26 and also identified as “Nano Banana,” Gemini 2.5 Flash Image enables developers to maintain character for consistency, make targeted transformations using natural language, and use Gemini knowledge to generate and edit images. The model is available via the Gemini API and Google AI Studio for developers and Vertex AI for enterprise. To assist with building with Gemini 2.5 Flash Image, Google has made updates to Google AI Studio’s build mode. Developers can quickly test the model’s capabilities with custom AI-powered apps and remix them or bring ideas to life with a single prompt, according to Google. Apps can be shared from Google AI studio or code saved to GitHub.

Gemini 2.5 Flash Image enables targeted transformation and precise local edits with natural language, Google said. For example, the model can blur the background of an image, remove a stain in a t-shirt, remove an entire person from a photo, alter a subject’s pose, add color to a black and white photo, all with a simple prompt. Key features of the model include:

- The ability to maintain the appearance of a character or object across multiple images and scenes, a significant challenge in generative AI.

- Users can make targeted edits to images.

- The model can blend multiple source images to create a single, photorealistic fused image.

- Leveraging the Gemini AI assistant, the model understands and incorporates real-world context and detail into image generation and editing tasks.

An update of the HTTP Client API, supporting the HTTP/3 protocol, has been added to Java Development Kit (JDK) 26, due in March 2026.

Officially announced on September 3, the HTTP/3 for the HTTP Client API feature is being targeted for JDK 26. This short-term release of Java will follow next month’s long-term support (LTS) release of JDK 25. Removal of the Java Applet API, now considered obsolete, is also targeted for JDK 26.

The HTTP/3 proposal calls for allowing Java libraries and applications to interact with HTTP/3 servers with minimal code changes. Goals include updating the HTTP Client API to send and receive HTTP/3 requests and responses; requiring only minor changes to the HTTP Client API and Java application code; and allowing developers to opt in to HTTP/3 as opposed to changing the default protocol version from HTTP/2 to HTTP/3.

HTTP/3 is considered a major version of the HTTP (Hypertext Transfer Protocol) data communications protocol for the web. Version 3 was built on the IETF QUIC (Quick UDP Internet Connections) transport protocol, which emphasizes flow-controlled streams, low-latency connection establishment, network path migration, and security among its capabilities.

JDK 26 is slated for just six months of Premier-level support from Oracle, while JDK 25 will have five years of this support. The current version of standard Java, JDK 24, also is set for just six months of Premier support. Other possible features for JDK 26 include features to be previewed in JDK 25, such as structured concurrency; primitive types in patterns, instanceof, and switch; and PEM (Privacy-Enhanced Mail) encodings of cryptographic objects. An experimental feature in JDK 25, JDK Flight Recorder CPU-time profiling, may also be included in JDK 26.

Graph database provider Neo4j has launched a new distributed graph architecture, Infinigraph, that will combine both operational (OLTP) and analytical (OLAP) workloads across its databases to help enterprises adopt agent-based automation for analytics.

The new architecture, which is currently available as part of Neo4j’s Enterprise Edition and will soon be available in AuraDB, uses sharding that distributes the graph’s property data across different members of a cluster.

“Enterprises are increasingly moving toward HTAP (Hybrid Transactional and Analytical Processing) to unify OLTP (operational) and OLAP (analytical) data. This convergence is becoming critical for enabling agentic AI, which depends on real-time decision-making,” said Devin Pratt, research director at IDC.

“Recent moves in the industry, such as Databricks’ acquisition of Neon and Snowflake’s acquisition of Crunchy Data, highlight how most vendors are aligning with this broader trend,” Pratt added.

Enterprises are progressively looking to adopt agentic AI or workflows — systems that can operate autonomously with minimal human intervention — for strategic reasons, such as resource optimization, operation efficiency, and scalability.

When it comes to analytical systems, enterprises that choose not to unify OLAP and OLTP are adding to unnecessary expenditure as they have to maintain more than one system and develop complex ETL pipelines to do analysis on all types of data, said Robert Kramer, principal analyst at Moor Insights and Strategy.

“Unifying workloads offers a single, reliable source of truth, reduces infrastructure overhead, and makes it easier to tackle complex tasks like fraud detection or customer recommendations,” Kramer added.

Sharding is key for Infinigraph. But can it sustain performance?

Neo4j’s use of sharding, or splitting the data among multiple nodes, has been a common technique to achieve scalability in relational databases.

However, applying sharding to graph databases is challenging because it can split related data across nodes, hurting performance, ISG Software Research’s director David Menninger pointed out.

“As a result, graph databases have not been as scalable as relational databases,” Menninger added.

On the contrary, Neo4j claims that its Infinigraph-infused databases will offer high performance over workloads and scale to over 100TB horizontally with zero rewrites.

However, Moor Insights and Strategy’s Kramer did not sound too confident of Neo4j’s Infinigraph-infused database offerings, at least in terms of performance.

“The key questions are whether Infinigraph can sustain performance under heavy mixed workloads and how well it integrates with existing enterprise systems. Customers will need to validate responsiveness and scalability in practice,” Kramer said.

Further, IDC’s Pratt pointed out that Neo4j has historically faced scrutiny over horizontal scalability, while competitors like TigerGraph demonstrated stronger scale-out performance.

Neo4j has been facing increasing competition, ISG’s Menninger pointed out.

It competes with Amazon Neptune, Azure CosmosDB, TigerGraph, Aerospike, SpannerGraph, and OrientDB, among others, in the graph database space, and nearly all data platform providers, as each of them offers graph database functionality, Menninger said.

“The real question is whether graph database specialists can offer a product that is differentiated enough to justify purchasing an additional data platform,” Menninger added.

Even if you love to code, there probably are times when you’d rather ask a question like, “What topics generated the highest reader interest this year?” than write an SQL query with phrases like STRFTIME(‘%&Y’, Date) = STRFTIME(‘%Y’, ‘now’). And, if your data set has dozens of columns, it’s nice to be able to avoid looking up or remembering the exact name of each one.

Generative AI and large language models (LLMs) ought to be able to help you “talk” to your data, since they excel at understanding language. But as any serious genAI user knows by now, you can’t necessarily trust a chatbot’s results—especially when it’s doing calculations.

One way around that is to ask a model to write the code to query a data set. A human can then check to make sure the code is doing what it’s supposed to do. There are various ways to get an LLM to write code for data analysis. One of the easiest for R and Python users is with the querychat package available for both languages.

Also see my quick look at Databot, an AI assistant for querying and analyzing data in R and Python.

Natural language querying with querychat

Querychat is a chatbot data-analysis component for the Shiny web framework. It translates a plain-language request into SQL, runs that SQL on your data, then displays both its result and the SQL code that generated it. Showing raw SQL code might not be ideal for the average user, but it’s great for programming-literate users who want to verify a model’s response.

A key advantage of the querychat workflow is that you never have to send your data to an LLM, or to the cloud. Instead, you define your data structure, the LLM writes SQL code based on your definition of the data’s columns, and then that code is run on your machine and displayed for you to review. That keeps your data private. Another benefit is that this can work equally well whether your data set has 20 rows or 2 million—the limit is what your local system can handle, not the LLM’s context window.

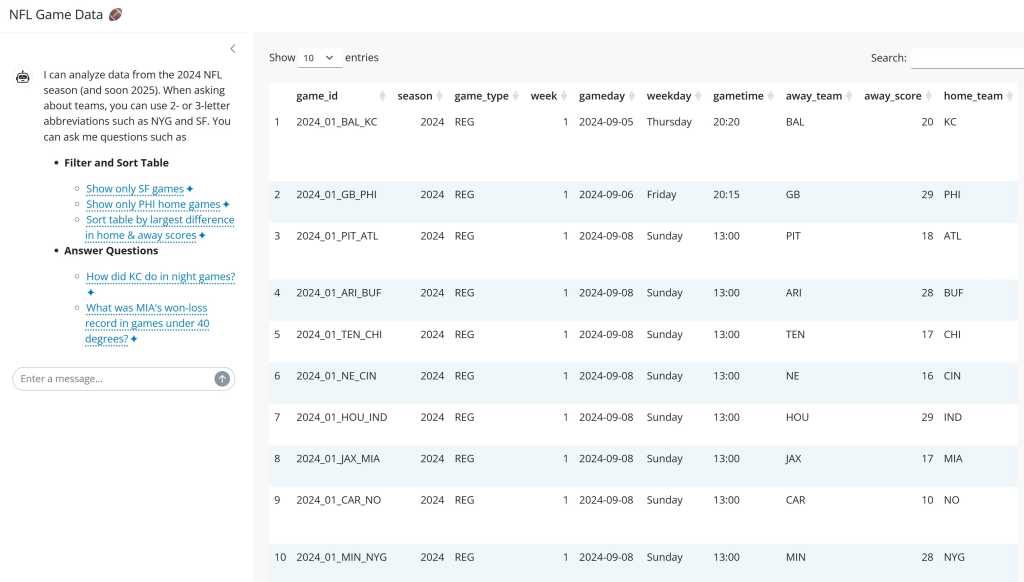

The querychat web app can answer questions about the data or filter the data display, all based on plain-language requests.

I’ll demonstrate how to use querychat with public data; namely, National Football League game results from last season (and this year when available). You can see examples for both R and Python. Let’s get started!

R with querychat

The first thing we’ll do is get and process the NFL data. For this example, I’ll use the nflverse suite of R packages to import the NFL game data into R. You can install it from CRAN with install.packages("nflverse").

In the code below, I load the nflreadr package from the nflverse as well as dplyr for basic data wrangling and feather for a file format used by both R and Python. You should also install these packages if you want to follow along and they’re not already on your system. Also, install querychat for R from GitHub with pak::pak("posit-dev/querychat/pkg-r").

I use nflreadr’s load_schedules() function to import data for the 2024 and 2025 NFL seasons, removing all games where the result is NA (representing games that are on the schedule but haven’t been played yet):

library(nflreadr)

library(dplyr)

library(feather)

game_data_all <- load_schedules(seasons = c(2024, 2025)) |>

filter(!is.na(result))Note that the nflverse game data is also available in CSV format for seasons since 1999. So, you could import it with your favorite CSV import function instead of using nflreadr. For example:

game_data_all <- rio::import(“https://raw.githubusercontent.com/nflverse/nfldata/refs/heads/master/data/games.csv”) |> filter(season %in% c(2024, 2025) & !is.na(result))The load_schedules() function returns a data frame with 46 variables for metrics including game time, temperature, wind, playing surface, outdoor or dome, point spreads, and more. Run print(dictionary_schedules) to see a data frame with a data dictionary of all the fields.

Now that I have the data, I need to process it. I’m going to remove some ID fields I know I don’t want and keep everything else:

cols_to_remove <- c("old_game_id", "gsis", "nfl_detail_id", "pfr", "pff",

"espn", "ftn", "away_qb_id", "home_qb_id", "stadium_id")

games <- game_data_all |>

select(-all_of(cols_to_remove))Although it’s obvious from the scores which teams won and lost, there aren’t actually columns for the winning and losing teams. In my tests, the LLM didn’t always write appropriate SQL when I asked about winning percentages. Adding team_won and team_lost columns makes that clearer for a model and simplifies the SQL queries needed. Then, I save the results to a feather file, a fast format for either R or Python:

games <- games |>

mutate(

team_won = case_when(

home_score > away_score ~ home_team,

away_score > home_score ~ away_team,

.default = NA

),

team_lost = case_when(

home_score > away_score ~ away_team,

away_score > home_score ~ home_team,

.default = NA

)

)

write_feather(games, "games.feather")Querying your data with querychat in R

You’ll likely get more accurate querychat results if you add a couple of optional arguments when setting up your chat, such as a description of your data columns. It’s better to explain what each of your data fields represents than have the model try to guess—the same as when you’re working with human colleagues!

The data description can be a Markdown or a text file, and you can use your judgment on how to structure the text. The advice from the documentation is, “Just put whatever information, in whatever format, you think a human would find helpful.”

Since nflreadr comes with a data dictionary for this data set, I started with that built-in dictionary_schedules data frame. I then deleted definitions of columns I removed from my data, converted the data frame into plain text, and saved that to a data_dictionary.txt file:

data_dictionary <- dictionary_schedules |>

filter(!(field %in% cols_to_remove) )

text_for_file <- paste0(data_dictionary$field, " (", data_dictionary$data_type, "): ", data_dictionary$description)

cat(text_for_file, sep = "\n", file = "data_dictionary.txt")Next, I opened and edited that file manually to add “This is data about recent National Football League games, one row per game” and definitions for my team_won and team_lost columns:

team_won (character): Name of winning team

team_lost (character): Name of losing teamYou should also include a greetings file with an initial greeting for the chatbot and a few sample questions. While that’s not required, greetings and sample questions will be generated for you if you don’t provide them, which costs both time and tokens. In fact, I received a warning in the console when I created an app without a greeting file: “For faster startup, lower cost, and determinism, please save a greeting and pass it to querychat_init().”

Sample questions use the format Your question here.

This is my greeting.md file, which includes how I want my chatbot to greet users and what sample questions I want to display:

I can analyze data from the 2024 NFL season (and soon 2025). When asking about teams, you can use 2- or 3-letter abbreviations such as NYG and SF. You can ask me questions such as

- **Filter and Sort Table**

- Show only SF games

- Show only PHI home games

- Sort table by largest difference in home & away scores

- **Answer Questions**

- How did KC do in night games?

- What was MIA's won-loss record in games under 40 degrees?The sample questions are formatted so that if you click on them in the app, the text pops into the chat text input.

Data to query, a data dictionary file, and a greeting file are enough to create a working chat infrastructure. The R code below uses querychat in a simple Shiny web app that lets you ask plain-language questions about the NFL data. Install any additional needed libraries not already on your system, such as shiny, bslib, and DT. It doesn’t look very pretty, but this version only needs approximately 20 lines of code:

library(shiny)

library(bslib) # Standard if you want updated Bootstrap

library(querychat)

library(DT)

library(feather)

games <- read_feather("games.feather")

# Create querychat configuration object

querychat_config <- querychat_init(

games,

data_description = readLines("data_dictionary.txt"),

greeting = readLines("greeting.md"),

# This is the syntax for setting a specific model:

create_chat_func = purrr::partial(ellmer::chat_openai, model = "gpt-4.1") )

ui <- page_sidebar(title = "NFL Game Data 🏈",

sidebar = querychat_sidebar("chat"),

DT::DTOutput("dt") )

server <- function(input, output, session) {

# Creates a querychat object using the configuration above

querychat <- querychat_server("chat", querychat_config)

output$dt <- DT::renderDT({

# querychat$df() is the filtered/sorted reactive data built into querychat

DT::datatable(querychat$df(), filter = 'none') # Set filter = 'top' if you want to filter table manually

})

}

shinyApp(ui, server)

Sharon Machlis

The app typically either filters/sorts the data or answers questions, but you can ask it to do both with a query like, “First show all PHI home games. Then tell me PHI home winning percentage.”

As with any Shiny app, you can customize the look and feel with cards, CSS, and more.

I preferred GPT-4.1 for this app compared with early tests using GPT-5. GPT-5 was slower and sometimes gave errors saying my account needed to be verified to use the model, even though it had already been approved.

Python with querychat

As we did in the previous example, the first step is to get and process the NFL data. If you work in both R and Python, you can use the feather data file from the previous example in the Python app, too. If you’d rather pull the data with Python, there’s a Python package nfl-data-py that imports nflverse data.

Below, I import data for the 2024 and 2025 seasons, drop ID columns I know I don’t want, delete rows without result values (scheduled but not played yet), and add columns for team_won and team_lost. The data wrangling script needs the nfl_data_py, pandas, numpy, and pyarrow packages. The app also needs chatlas, querychat, shiny, and python-dotenv.

During my testing, I occasionally had trouble installing querychat with uv add querychat, but uv pip install git+https://github.com/posit-dev/querychat.git was reliable.

This is the Python data wrangling code:

import nfl_data_py as nfl

import pandas as pd

import numpy as np

game_data_all_py = nfl.import_schedules(years=[2024, 2025])

columns_to_drop = [

'old_game_id', 'gsis', 'nfl_detail_id', 'pfr', 'pff', 'espn', 'ftn',

'away_qb_id', 'home_qb_id', 'stadium_id'

]

games_py = game_data_all_py.drop(columns=columns_to_drop)

games_py = games_py.dropna(subset=['result'])

conditions = [

games_py['home_score'] > games_py['away_score'],

games_py['away_score'] > games_py['home_score']

]

# Define the outcomes for the 'team_won' column

winner_outcomes = [

games_py['home_team'],

games_py['away_team']

]

# Define the outcomes for the 'team_lost' column

loser_outcomes = [

games_py['away_team'],

games_py['home_team']

]

# Create the new columns based on the just-defined conditions.

games_py['team_won'] = np.select(conditions, winner_outcomes, default=None)

games_py['team_lost'] = np.select(conditions, loser_outcomes, default=None)

games_py.reset_index(drop=True).to_feather("games_py.feather")There’s nothing specific to the programming language about the greeting.md file; you can either use the one I wrote for the R example or create your own.

Querying your data using querychat and Python

As mentioned previously, you’ll have better results if you provide greeting and data dictionary text files to your querychat.

Whereas R’s NFL package has a function to print out a data dictionary, I didn’t see one in the Python version. However, you can access the R function’s raw CSV dictionary data on GitHub. Here’s the Python code to generate a data dictionary text file from that CSV:

dictionary_url = "https://raw.githubusercontent.com/nflverse/nflreadr/1f23027a27ec565f1272345a80a208b8f529f0fc/data-raw/dictionary_schedules.csv"

dictionary_df = pd.read_csv(dictionary_url)

# Remove the columns not in the data frame

filtered_dictionary_df = dictionary_df[~dictionary_df['field'].isin(columns_to_drop)].copy()

# 3. Add the new team_won and team_lost columns

new_rows_data = {

'field': ['team_won', 'team_lost'],

'type': ['character', 'character'],

'description': ['Name of winning team', 'Name of losing team']

}

new_rows_df = pd.DataFrame(new_rows_data)

final_dictionary_df = pd.concat([filtered_dictionary_df, new_rows_df], ignore_index=True)

lines_for_file = [

f"{row['field']} ({row['type']}): {row['description']}"

for index, row in final_dictionary_df.iterrows()

]

with open("data_dictionary_py.txt", "w") as f:

f.write("\n".join(lines_for_file))Now we’re ready to use querychat in a Python Shiny app.

Below is code for a simple querychat Python Shiny app based on the querychat Python docs. I used an .env file to store my OpenAI API key.

Save the following code to app.py and launch the app with shiny run app.py. I ran it inside the Positron IDE with the Shiny extension installed; VS Code should work the same:

import chatlas

import querychat as qc

import pandas as pd

from shiny import App, render, ui

from dotenv import load_dotenv

from pathlib import Path

load_dotenv()

games = pd.read_feather("games_py.feather")

def use_openai_models(system_prompt: str) -> chatlas.Chat:

return chatlas.ChatOpenAI(

model="gpt-4.1",

system_prompt=system_prompt,

)

querychat_config = qc.init(

data_source=games,

table_name="games",

greeting=Path("greeting.md"),

data_description=Path("data_dictionary_py.txt"),

create_chat_callback= use_openai_models

)

# Create UI

app_ui = ui.page_sidebar(

qc.sidebar("chat"),

ui.output_data_frame("data_table"),

)

# Shiny server logic

def server(input, output, session):

# This create a querychat object using the configuration from above

chat = qc.server("chat", querychat_config)

# chat.df() is the filtered/sorted reactive data frame

@render.data_frame

def data_table():

return chat.df()

# Create Shiny app

app = App(app_ui, server)

You should now have a basic working Shiny app that answers questions about NFL data.

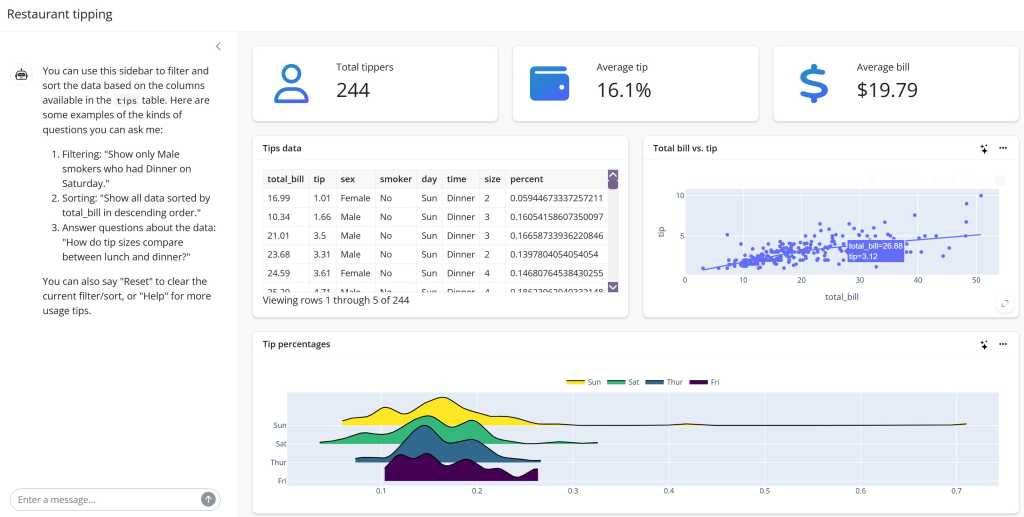

There’s a lot more you can do with these packages, including connecting to an SQL database and enhancing the Shiny application. Posit CTO Joe Cheng, the creator of querychat, demo’d an expanded Python version as a full-blown querychat dashboard app. There’s a template for it in the Shiny for Python gallery:

Sharon Machlis

There is also a demo repo for an R sidebot you can run locally by cloning the R sidebot GitHub repository. Or, you can examine the app.R file in the repository to get ideas for how you might create one.

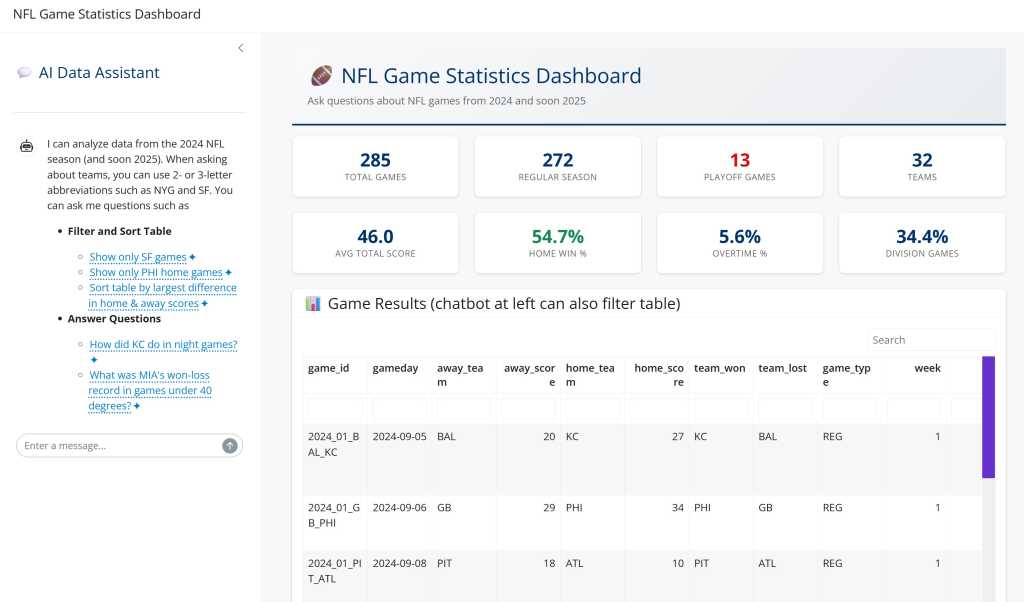

Rather than do the work myself, I had the Claude and Gemini chatbots build me a more robust version of the basic NFL Shiny app I’ve demo’d here. You can see the code for that application, shown below, in my querychat app repository.

Sharon Machlis

It’s taken some time for GitHub Spark, GitHub’s new AI-powered coding platform, to go beyond its initial small, closed beta. However, it’s now available to anyone with a GitHub CoPilot+ subscription, with the possibility of a wider future rollout. Unlike the standard GitHub Copilot, which acts as a smarter IntelliSense and as a virtual pair programmer that makes suggestions based on what you type, Spark is more like the tools built into Visual Studio Code’s Copilot Agent, using prompts to build answers to your questions and producing code that aims to implement your ideas.

This is pure vibe coding, as good as it gets, because although you can edit the GitHub Spark output in its code view, you’re much more likely to change or refine its prompts to get the application you want. Instead of starting with a design, you start with an idea and use the tools in Spark to turn it into code—quickly and without needing to interact with the generated JavaScript.

What’s it like to build in GitHub Spark?

I’ve been experimenting with the invite-only preview of the service on GitHub’s Next platform. I haven’t done any vibecoding before, only occasionally using GitHub Copilot to help me figure out the syntax for unfamiliar APIs, such as the one used to authorize access to Mastodon and then post content to it. It was interesting to go from an idea that began as this prompt, “an application to roll dice for a Dungeons & Dragons game; letting me pool multiple dice,” to one that let me change the color of the dice, save regularly reused dice combinations, and store a history of die rolls and results.

Each iteration is built on the generated code, using new prompts to refine design and features. I was able to see a preview of the application and the current code. Unfortunately, I didn’t have the option to save the code to a repository, though I could have easily cut and pasted it into Visual Studio Code or a similar editor and then saved it both locally and in GitHub. That may work to save your generated code, but it does rely on libraries and features provided by the underlying Spark platform, so it will not be fully portable outside of GitHub.

After five prompts, I had a web application I was happy with and I could share it with other GitHub Spark users. There are two sharing options: the default, with read-only access, and one that lets other users collaborate in the same design space. The JavaScript the preview generates uses the JSX format, so it offers a more structured view of your code, making it easier to read and understand.

Inside the GitHub Spark environment

The GitHub Spark development space is a web application with three panes. The middle one is for code, the right one shows the running app (and animations as code is being generated), and the left one contains a set of tools.

These tools offer a range of functions, first letting you see your prompts and skip back to older ones if you don’t like the current iteration of your application. An input box allows you to add new prompts that iterate on your current generated code, with the ability to choose a screenshot or change the current large language model (LLM) being used by the underlying GitHub Copilot service. I used the default choice, Anthropic’s Claude Sonnet 3.5. As part of this feature, GitHub Spark displays a small selection of possible refinements that take concepts related to your prompts and suggest enhancements to your code.

Other controls provide ways to change low-level application design options, including the current theme, font, or the style used for application icons. Other design tools allow you to tweak the borders of graphical elements, the scaling factors used, and to pick an application icon for an install of your code based on Progressive Web Apps (PWAs).

GitHub Spark has a built-in key/value store for application data that persists between builds and sessions. The toolbar provides a list of the current key and the data structure used for the value store. Click on a definition and you’re given an editable view of the currently stored data so you can remove unwanted content. If your code calls an LLM, you’ll see a list of the prompts it uses.

The final option is a set of user-specific settings, including a base prompt that can be used for all your applications. The settings help customize your application and add your own style to the AI-generated code. There’s a link to a GitHub-sponsored Discord server where you can chat with other users.

For the basic, prototype-style React app I built, I found the default settings worked well enough, and although I used a couple of the generated prompts, I did edit them before submitting so that they were better aligned to my intentions. As my refinements got more detailed, application generation took longer, though it took, at the most, three or four minutes for about 500 lines of React JavaScript—a lot less time than coding it myself.

An enterprise GitHub Spark

If you have a GitHub Copilot+ license ($39/month per user), you can use a more enterprise-friendly version of GitHub Spark. This builds on the preview version I was using, but instead of delivering JavaScript React code, it uses the more powerful TypeScript and integrates with a Codespace where you can edit and test code. There’s support for team working, using a repository to store and share code with two-way synchronization between the two environments.

Like the under-development version I was using, this release of GitHub Spark uses prompt-driven development with support for complex, multipart prompts. Again, you can refine the code through multiple prompt iterations and upload images to help lay out user interfaces. These can include sketches or even photographs of whiteboard designs.

Once code has been generated, you can test it, apply more iterations, or use the built-in code editor to make changes. You can use the preview pane to focus on specific display elements before switching to the editor to refine them. This lets you use the standard theme and style tools to edit their look and feel alongside the entire application. The generated code will include editable CSS for more targeted editing by designers. Outside of the built-in theme tools, you can upload your own visual assets, adding them to your app. The same tools as in the preview manage the built-in key/value store, along with any prompts used with AI integrations and third-party APIs.

Like many other prompt-based coding tools, there are limitations to GitHub Spark. Your subscription includes 375 Spark prompts and iterations a month, with added pay-as-you-go options for more prompts. These cost $0.16 for each additional prompt. There are no costs for storing and running published applications, though if you exceed the usage limits, it will block an application until your next billing cycle.

What is GitHub Spark good for?

There’s certainly a place for tools like this in your development toolkit, but as good as the code is, it’s no substitute for writing it yourself. It’s not “your code,” so you’re not familiar with the structure and the style. Editing and reusing code from GitHub Spark is like understanding a section of code from an open source project or any random stranger’s code. You need to first understand why the developer made certain decisions.

Where should GitHub Spark be used? The obvious place is as a prototyping tool, allowing you to quickly build a working example of your idea. It’s a great way to mock up applications and have a working back end with access to data and services, ready to demonstrate and share before spending the necessary time coding the final solution.

It manages to show the possibility of using tools like this to add front ends to applications built using no-code or low-code tools, like those provided by Microsoft’s Power Platform. A mix of user prompts and code analysis could quickly generate UI concepts, iterating to produce a final, shareable application.

Building on React and TypeScript, as GitHub Spark is doing, means it’s possible to take the generated code and add it to a repository where it can be modified by front-end teams, an example of Microsoft’s fusion team concept that mixes professional developers, stakeholders, subject matter experts, and low-code developers. The result is interesting: an AI-powered development tool that delivers well-designed, functional code. All that’s missing is a way to take your code and the GitHub Spark runtime and deploy it on your own systems—on premises or in the cloud.

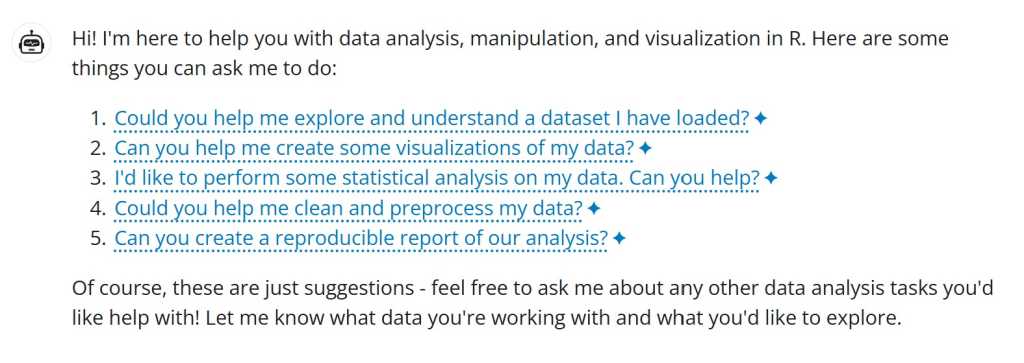

If you’d like an LLM to act more like a partner than a tool, Databot is an experimental alternative to querychat that also works in both R and Python. Databot is designed to analyze data you’ve imported into your working session and suggest questions you might want to ask. After you decide what to ask, Databot will write the code to generate your answer. Posit CTO Joe Cheng, creator of querychat, also wrote this package. It’s now available as an add-on for the Positron IDE.

Also see the introduction to querychat that accompanies this sidebar.

Databot is “an experimental AI assistant that is designed to come alongside you, and help you by performing tasks within your R session,” according to the R documentation. “Point Databot at some data and it will come up with plenty of ideas of how to analyze it.” It currently only uses Anthropic’s Claude Sonnet 3.5, so you’ll need an Anthropic API key to use it.

Note that when the docs say “experimental,” they mean it. “Pretty fragile currently,” the README warns.

With that in mind, I installed databot with remotes::install_github("jcheng5/databot"), loaded my NFL games data set and data dictionary file into my R session along with the package, and created the following chat:

games <- feather::read_feather("games.feather")

data_dictionary <- readLines("data_dictionary.txt")

databot::chat()A chatbot popped up asking what I’d like help with

Sharon Machlis

I asked for visualizations, and it responded:

There are many interesting variables we could visualize. Let me suggest a few possibilities:

Suggested next steps:

Can you create a histogram of game total scores to see the distribution of scoring?

Could you make a box plot comparing home vs away team scores?

Let's create a scatter plot of temperature vs total score to see if weather affects scoring.

Can you show me the distribution of betting spreads and how they relate to actual game results?

Could you create a visualization showing win/loss records by team?

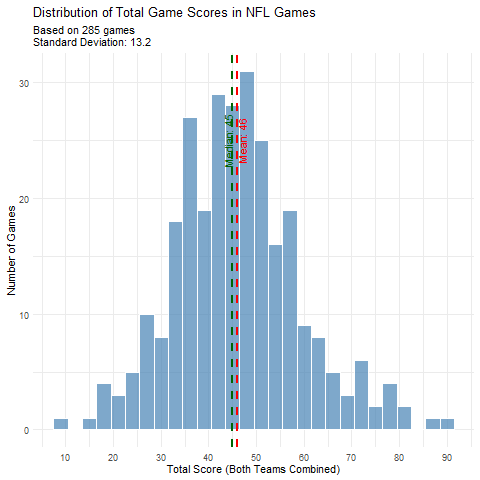

Which of these interests you, or would you like to explore something else in the data? I can help create any type of visualization you'd find useful!I asked for the weather versus scoring plot, and it wrote R code that unfortunately didn’t show an interesting trend. Next, I tried the histogram of total scoring, which returned R code, the bot’s observations, and a plot. Databot then provided the following analysis:

This visualization reveals several interesting patterns about NFL scoring:

1. The distribution looks approximately normal (bell-shaped), with some slight right skew

2. The mean total score is about 44.5 points

3. The median is very close to the mean at about 44 points

4. Most games fall between roughly 30 and 60 total points

5. There are a few outlier games with very high scoring (80+ points) and very low scoring (<20 points)

Sharon Machlis

While many mainstream chatbots have similar capabilities, few write and run R code like this; generative AI data analysis code is typically done in Python.

The Python version of Databot may be less compelling because of alternatives like ChatGPT’s Data Analyst. Agents may also have some similar capabilities. But if you are interested in using a chatbot specifically for data analysis in either R or Python, you can check out the Python Databot or R Databot GitHub repos.

Posit has also made Databot available as an add-on for its Positron IDE, which is a convenient way to use the tool in a data science workflow. Users must acknowledge that they understand it is an experimental research preview in order to use it, but you do have the power to steer its analysis and check its code.

Sharon Machlis

Two supplemental point releases of the Angular 20 web framework have added production-readiness for zoneless APIs and an experimental MCP (Model Context Protocol) server.

An August 30 blog post from the Angular team announced updates and improvements in Angular 20.1 and 20.2. A highlight, zoneless APIs, became stable and production-ready in Angular 20.2. This update makes using Angular without Zones.js an option, addressing issues such as difficulty in application debugging and with larger bundle sizes. The MCP server, meanwhile, helps with experimental LLM code generation. The initial launch includes tools for searching documentation for best practices to improve LLM code generation and getting project metadata.

Angular’s 2025 summer update included these additional improvements:

- Angular apps now can be generated in the Gemini Canvas and Google AI Studio tools.

- Two new primitives,

animate.enterandanimate.leave, support configuring which CSS classes should be used for the “enter” and “leave” animations. - The Mat Menu component was updated for use as a context menu.

- Template authoring was enhanced with capabilities such as a more-ergonomic syntax for ARIA attributes and better support for class names used by Tailwind.

- A new

currentNavigationsignal property is available on the router. - TypeScript 5.9 support was added.

OpenAI is acquiring Washington-based product development platform providing startup Statsig for $1.1 billion to speed up its generative AI-based product launches and accelerate iteration cycles of existing products such as Codex and ChatGPT.

The acquisition could be a shot in the arm for the ChatGPT-maker as rivals, such as Google, AWS, and Anthropic, aggressively vie for more market share.

[ Related: More OpenAI news and insights ]

OpenAI products, such as Codex and ChatGPT, which it often terms as applications, compete with Google’s Gemini-based offerings, Anthropic’s Claude-based offerings, and AWS’s Amazon Q and Kiro.

The market for AI assistants is expected to expand from $3.35 billion in 2025 to $21.11 billion by 2030, according to a report from MarketsAndMarkets.com. A separate report from Statista showed that revenue in the AI development tool software market is projected to reach $9.76 billion in 2025 and $12.99 billion by 2030.

Statsig’s technology stack, according to Forrester VP and principal analyst Charlie Dai, will strategically help OpenAI, as accelerated product development cycles could help the ChatGPT-maker get an edge over rivals.

“Statsig’s core value lies in its real-time experimentation and feature flagging tools. It allows teams to test, measure, and iterate on product features quickly and safely. OpenAI can refine ChatGPT and Codex features with precision, reducing time-to-market,” Dai explained.

Additionally, Dai pointed out that Statsig will bring a structured, data-driven product iteration approach to OpenAI — an attribute that the analyst said is missing across OpenAI rivals.

As part of the acquisition, Statsig CEO Vijaye Raji will step into a new role as CTO of Applications — a division of OpenAI that Sam Altman opened in May by placing former Instacart CEO Fidji Simo in charge.

Raji, who previously led large-scale consumer engineering at Meta, will report to Simo, who in turn will continue to report to Altman.

“As a hands-on builder and trusted leader, Vijaye will head product engineering for ChatGPT and Codex, with responsibilities that span core systems and product lines, including infrastructure and Integrity,” the company wrote in a blog post.

Notably, both ChatGPT and Codex, over the last month, have seen significant updates with the AI assistant getting access to GPT-5 and the coding assistant getting new capabilities.

The new Codex features include a new IDE extension, the ability to move tasks between the cloud and local environment, code reviews in GitHub, and a revamped Codex CLI (command line interface).

OpenAI said that although Statsig employees will become in-house staff post-acquisition, they will continue to serve their customers out of Seattle, at least for the foreseeable future.

“We’ll take a measured approach to any future integration, ensuring continuity for current customers and enabling the team to stay focused on what they do best,” OpenAI wrote.

OpenAI has not provided a timeframe as to when it expects the deal to close.

The Java virtual machine (JVM) is a program whose sole purpose is to execute other programs. This simple idea has made Java one of the most successful and long-lived platforms of all time.

The JVM was a highly novel idea when it was introduced in 1995, and it continues to be a vital force in programming innovation today. This article introduces the Java virtual machine, what it does, and how it works in your programs.

What does the JVM do?

The JVM has two primary functions: to allow Java programs to run on any device or operating system (known as the “write once, run anywhere” principle), and to manage and optimize program memory. When Java was released in 1995, all computer programs were written to a specific operating system, and program memory was managed by the software developer. It’s not hard to see why the JVM was a revelation in that era.e software developer. It’s not hard to see why the JVM was a revelation in that era.

Having a technical definition for the JVM is useful, and there’s also an everyday way that software developers think about it. We can break that down to make it clear:

- Technical definition: The JVM is the specification for a software program that executes code and provides the runtime environment for that code.

- Everyday definition: The JVM is how we run our Java programs. We configure the JVM settings (or use the defaults) and then tell it to execute our application code.

When developers talk about the JVM, we sometimes mean in general the infrastructure that the Java platform provides to our code. The JVM is specifically the runtime part of that infrastructure.

Often, we are referring to the actual process running on a machine, for example, when we use the jps command to locate the JVM process running on a server. We can use that command to examine the resource usage for a Java application running inside the JVM.

Compare that to the JVM specification, which describes the requirements for building a program that performs those tasks. Usually, we refer to that as “the JVM spec” rather than “the JVM,” which refers to the tool we use in our daily work.

JVM languages

While it was once only for Java, many popular and well-known languages now run in the Java virtual machine.

Among the most known are Scala, used for real-time, concurrent applications, and Groovy, a dynamically typed scripting language. Another prominent example is Kotlin, which delivers a blend of object-oriented and functional styles. Other examples include Jython, JRuby, and Clojure.

All of these are considered JVM languages. Even though a developer might not be coding in Java, they retain access to the Java platform’s flexibility, stability, and vast ecosystem of libraries.

Even more polyglot power is driven by projects like GraalVM, which we can use to run JavaScript and WebAssembly on the JVM.

How is the JVM developed and maintained?

The OpenJDK project started with Sun Microsystems’ decision to open-source Java and has continued through Oracle’s stewardship. Today, most of the development of the Java platform (including the JVM) is channeled through the Java Community Process (JCP). Within the JCP, new features and revisions are proposed as Java specification requests (JSRs). For the actual implementation details and enhancements within the OpenJDK project, Java enhancement proposals (JEPs) are used to track and guide specific changes to the JDK, which includes the JVM.

Garbage collection

If we take a wide view, the most common interaction with a running JVM is to check the memory usage in the heap and stack. The most common adjustment is performance-tuning the JVM’s memory settings.

Before Java, all program memory was managed by the programmer. In Java, program memory is managed by the JVM. It’s thanks to the JVM that you don’t have to manually allocate and dispose of memory in Java.

The JVM manages memory through a process called garbage collection, which continuously identifies and eliminates unused memory in Java programs. Garbage collection happens inside a running JVM.

In the early days, Java came under criticism for not being as “close to the metal” as C++, and therefore not as fast. The garbage collection process was especially controversial. Since then, a variety of algorithms and approaches have been proposed and used for garbage collection. With consistent development and optimization, garbage collection has vastly improved. (Automatic memory management also caught on and is a common feature of other modern languages like JavaScript and Python.)

The three parts of the JVM

Broadly speaking, there are three parts to the JVM: specification, implementation, and instance. Let’s consider each part.

The JVM specification

First, the JVM is a software specification. In a somewhat circular fashion, the JVM spec highlights that its implementation details are not defined within the spec, to allow for maximum creativity in its realization:

To implement the Java virtual machine correctly, you need only be able to read the

classfile format and correctly perform the operations specified therein.

J.S. Bach once described creating music similarly:

All you have to do is touch the right key at the right time.

So, all the JVM has to do is make class files behave correctly. Sounds simple, and might even look simple from the outside, but it is a massive undertaking, especially given the power and flexibility of the Java language.

JVM implementations

Implementing the JVM specification results in an actual software program, which is a JVM implementation. In fact, there are many JVM implementations, both open source and proprietary. OpenJDK’s HotSpot is the JVM reference implementation. It remains one of the most thoroughly tried-and-tested codebases in the world.

HotSpot may be the most commonly used JVM, but it is by no means the only one. Another interesting and popular implementation, already mentioned, is GraalVM, which features high performance and support for other, traditionally non-JVM languages like C++ and Rust via the LLVM spec. There are also domain-specific JVMs like the embedded robotics JVM, LeJOS.

Another popular JVM implementation is OpenJ9, original from IBM, now an Eclipse project.

Typically, you download and install the JVM as a bundled part of a Java Runtime Environment (JRE). The JRE is the on-disk part of Java that spawns a running JVM.

A JVM instance

After the JVM spec has been implemented and released as a software product, you may download and run it as a program. That downloaded program is an instance (or instantiated version) of the JVM.

Most of the time, when developers talk about “the JVM,” we are referring to a JVM instance running in a software development or production environment. You might say, “Hey Anand, how much memory is the JVM on that server using?” or, “I can’t believe I created a circular call and a stack overflow error crashed my JVM. What a newbie mistake!”

How the JVM loads and executes class files

We’ve talked about the JVM’s role in running Java applications, but how does it perform its function? To run Java applications, the JVM depends on the Java class loader and a Java execution engine.

The Java class loader

Everything in Java is a class, and all Java applications are built from classes. An application could consist of one class or thousands. To run a Java application, a JVM must be started, and it then loads the compiled .class files into its runtime context. A JVM depends on its class loader to perform this function.

When you type java classfile, you are saying: start a JVM instance and load the named class into it.

The Java class loader is the part of the JVM that loads classes into memory and makes them available for execution. Class loaders use techniques like lazy-loading and caching to make class loading as efficient as it can be. That said, class loading isn’t the epic brainteaser that (say) portable runtime memory management is, so the techniques are comparatively simple.

Every Java virtual machine includes a class loader. The JVM spec describes standard methods for querying and manipulating the class loader at runtime, but JVM implementations are responsible for fulfilling these capabilities. From the developer’s perspective, the underlying class loader mechanism is a black box.

The execution engine

Once the class loader has done its work of loading classes, the JVM begins executing the code in each class. The execution engine is the JVM component that handles this function. The execution engine is the essential piece in the running JVM. In fact, for all practical purposes, it is the JVM instance.

Executing code involves managing access to system resources. The JVM execution engine stands between the running program—with its demands for file, network, and memory resources—and the operating system, which supplies those resources.

System resources can be divided into two broad categories: memory and everything else. Recall that the JVM is responsible for obtaining memory and disposing of it when it’s unused, and that garbage collection is the mechanism of that disposal.

So the JVM’s execution engine is responsible for taking the new keyword in Java, and turning it into an operating system-specific request for memory allocation. When the JVM detects that memory is no longer accessed by the program, it can schedule it for deallocation (see this tutorial for more about the details in that process).

The JVM is also responsible for allocating and maintaining the referential structure that the developer takes for granted.

Beyond memory, the execution engine manages resources for file system access and network I/O. Since the JVM is interoperable across operating systems, this is no mean task. In addition to each application’s resource needs, the execution engine must be responsive to each operating system environment. That is how the JVM is able to handle in-the-wild demands.

For example, when you grab a file handle in code, the JVM does the work of negotiating that in terms specific to the operating system you are using.

JVM evolution: Past, present, future

Because the JVM is a well-known runtime with standardized configuration, monitoring, and management, it is a natural fit for containerized development using technologies such as Docker and Kubernetes. It also works well for platform-as-a-service (PaaS), and supports a variety of serverless approaches. Because of all of these factors, the JVM is well-suited to microservices architectures and cloud environments. Java and other JVM languages have been fully adopted into cloud-native architectures thanks to the JVM.

More recently, Java’s stewards have integrated virtual threads into the JVM. This means you can easily adopt light-weight concurrency in your applications and let the JVM deal with orchestrating the OS threads in the most efficient way possible.

The JVM is also well adapted and evolving in the age of AI.

Conclusion

In 1995, the JVM introduced two revolutionary concepts that have since become standard fare for modern software development: “Write once, run anywhere” and automatic memory management. Software interoperability was a bold concept at the time, but few developers today would think twice about it. Likewise, whereas our engineering forebears had to manage program memory themselves, my generation grew up with garbage collection.

We could say that James Gosling and Brendan Eich invented modern programming, but thousands of others have refined and built on their ideas over the following decades. Whereas the Java virtual machine was originally just for Java, today it has evolved to support many languages and technologies. Looking forward, it’s hard to see a future where the JVM isn’t a prominent part of the development landscape.

When we talk about a “desktop application,” we generally mean a program that runs with a graphical UI that’s native to the platform or powered by some cross-platform visual toolkit. But a desktop application these days is just as likely to be a glorified web page running in a standalone instance of a browser.